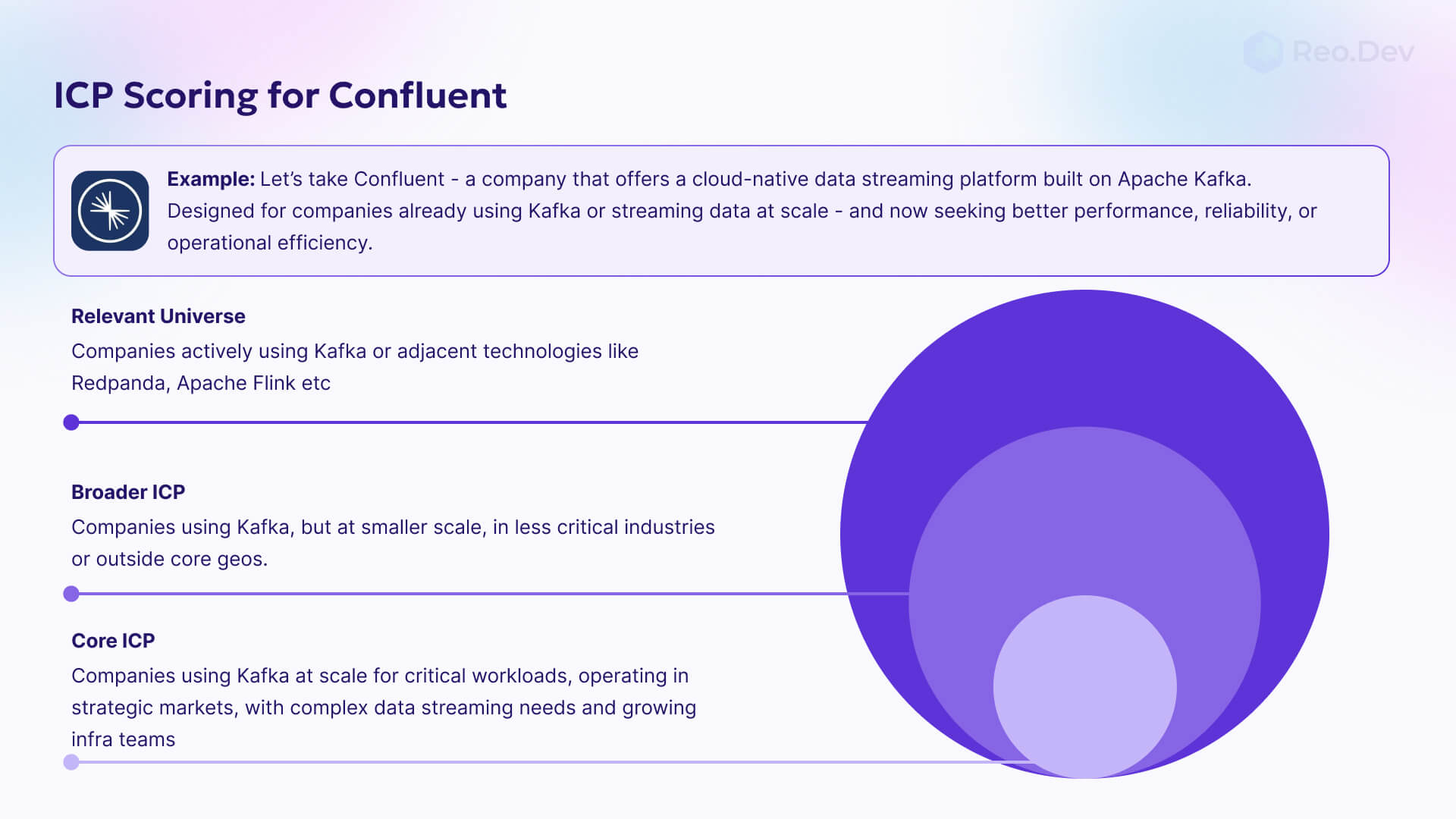

Transform data on your desired technology's users into actionable sales territories by combining firmographic ICP criteria with real-time technology adoption signals. Account assignment based solely on company size and industry leaves money on the table; successful DevTool sales teams prioritize accounts showing active technology expansion signals.

Strategic account prioritization framework

Building an effective Total Addressable Market (TAM) requires more than basic firmographic filters. Companies using your desired technology represent varying levels of buying intent depending on their implementation stage, team growth, and technology stack evolution. Learn our complete framework for building DevTool ICP account lists to establish the foundation for strategic account segmentation.

Standard ICP criteria (geography, industry, company size, revenue) provide only baseline qualification. High-performing sales teams layer technology hiring signals on top of firmographic data to identify accounts actively expanding their technical capabilities. Companies hiring engineers or architects for your desired technology signal active investment in the technology stack, which suggests higher purchase intent and budget availability.

In-market account identification and assignment

Priority account assignment should factor in recent technology hiring patterns as a proxy for market timing. Companies posting jobs for Redis engineers, Kubernetes specialists, or React developers demonstrate active technology expansion—making them significantly more likely to evaluate complementary tools within 90 days.

Our LinkedIn outreach playbook details the specific process for identifying and assigning these in-market accounts to sales teams. This approach increases meeting acceptance rates by 40% compared to generic outbound because prospects are already in active buying mode.

Territory assignment best practices

Tier 1 accounts: Companies using your desired technology with recent hiring activity for related roles. These accounts get immediate sales attention with personalized outreach referencing their specific technology initiatives and hiring needs.

Tier 2 accounts: Established users of your desired technology with strong firmographic fit but no recent hiring signals. Assign these accounts to longer-term nurture campaigns and quarterly check-ins to monitor for technology expansion signals.

Tier 3 accounts: Companies using your desired technology with weaker ICP fit or unclear expansion signals. Route these accounts to inside sales or marketing-qualified lead campaigns until stronger buying signals emerge.
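
The three tiers above can be expressed as a simple rule. The following is a minimal sketch, not a definitive scoring model. The `Account` fields, the 0.7 fit threshold, and the 90-day hiring window are all illustrative assumptions, and it assumes that a recent hiring signal alone is enough to place an account in Tier 1.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Account:
    """Hypothetical account record; field names are illustrative."""
    name: str
    uses_technology: bool
    icp_fit: float  # assumed 0.0-1.0 firmographic fit score
    last_related_job_post: Optional[date]  # most recent posting for a related role


def assign_tier(
    account: Account,
    today: date,
    hiring_window_days: int = 90,  # assumed definition of "recent"
    strong_fit: float = 0.7,       # assumed threshold for "strong" ICP fit
) -> Optional[int]:
    """Return 1, 2, or 3, or None if the account does not use the technology."""
    if not account.uses_technology:
        return None

    recent_hiring = (
        account.last_related_job_post is not None
        and today - account.last_related_job_post
        <= timedelta(days=hiring_window_days)
    )

    if recent_hiring:
        return 1  # technology user with recent hiring for related roles
    if account.icp_fit >= strong_fit:
        return 2  # strong firmographic fit, no recent hiring signal
    return 3      # weaker fit or unclear expansion signals


today = date(2024, 6, 1)
accounts = [
    Account("Acme", True, 0.9, date(2024, 4, 15)),
    Account("Globex", True, 0.8, date(2024, 1, 1)),
    Account("Initech", True, 0.4, None),
]
tiers = {a.name: assign_tier(a, today) for a in accounts}
```

In practice the thresholds would be tuned against your own conversion data rather than fixed at these values.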

Refresh account assignments monthly based on new hiring signals and technology adoption data. Companies can move between tiers quickly as their technology needs evolve, and sales territories should reflect these dynamic market conditions rather than static demographic assignments.
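
One way to make the monthly refresh concrete is to compare last month's tier assignments with this month's and surface only the accounts that moved. The sketch below assumes tiers are stored as a plain mapping from account name to tier number; the function name and data shape are hypothetical.

```python
from typing import Dict, Optional, Tuple


def tier_changes(
    previous: Dict[str, int],
    current: Dict[str, int],
) -> Dict[str, Tuple[Optional[int], Optional[int]]]:
    """Return {account: (old_tier, new_tier)} for accounts whose tier changed.

    None as old_tier marks a newly added account; None as new_tier marks an
    account that dropped out of the list.
    """
    changes: Dict[str, Tuple[Optional[int], Optional[int]]] = {}
    for name, new_tier in current.items():
        old_tier = previous.get(name)
        if old_tier != new_tier:
            changes[name] = (old_tier, new_tier)
    for name, old_tier in previous.items():
        if name not in current:
            changes[name] = (old_tier, None)
    return changes


last_month = {"Acme": 2, "Globex": 1, "Initech": 3}
this_month = {"Acme": 1, "Globex": 1, "Hooli": 2}
moved = tier_changes(last_month, this_month)
```

Accounts promoted into Tier 1 can then be routed to sales for immediate outreach, while unchanged accounts stay with their current owner.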

The combination of your desired technology usage data and hiring intelligence creates a predictive framework for sales success, ensuring your team focuses energy on accounts most likely to convert within the current quarter.